The relevance of this paper, which concerns the creation and deployment of robotic systems in the fleet, stems from the changing posture of warfighting with the weapons, military and special equipment of the Russian Federation (WMSE RF). It is proposed that strike and reconnaissance groups will represent the Russian navy. In these groups, the underwater and surface forces, as carriers of strike and detection weapons, together with the command and control of these forces, will be integrated through network technologies into a unified information and control system. The elements of such a system should be traditional objects of armed struggle (ships, planes, submarines, etc.) as well as robotic complexes (RTC) and systems.

A WMSE RF unit that includes an RTC group should be regarded as a cyber-physical object: an informationally linked set of physical components, onboard measurement systems, onboard executive systems, onboard computer systems that implement the control algorithms, and a control point with an information-control field. Such an object must exhibit self-sufficient behavior that guarantees the fulfillment of a given mission. The desired level of efficiency of such systems and complexes can be achieved mainly by directing the efforts of designers and scientists toward improving the intellectual component of their control system: 1) the set of algorithms for the onboard RTC control systems; 2) the algorithms governing the activities of the operators who control the RTC. Together, they form the RTC "cooperative intelligence" [1, 2, 8], which allows the mission to be carried out in accordance with the RTC functional purpose in an uncertain and poorly formalized environment.

An RTC is an autonomous intelligent system (hereinafter, an agent) that displays human-like behavior and has [1, 3, 4, 6]:

- onboard measuring devices (or a set of them) that act as sensors, providing information about the state of the environment and about the agent's own state;

- onboard actuators (or a set of them) that act as effectors, through which the system acts on the external environment and on itself;

- means of communication with other systems;

- "cooperative intelligence", whose components are the onboard computing tools, their software, and the operators, who carry the set of algorithms for solving problems in the subject area and pass them on through study and training.

The agent performs its assigned tasks based on an assessment of its own status, its understanding of how the combat situation is developing, and the information received through the communication module. The agent can predict the changes in the environment that its actions will cause and evaluate their usefulness.
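The agent structure described above can be illustrated with a minimal Python sketch. It is not taken from the cited works: the class `Agent`, its fields, and the one-dimensional toy world in `demo_agent` are all hypothetical names introduced only to show how sensing, communication, prediction, and usefulness evaluation fit together.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

State = Dict[str, float]


@dataclass
class Agent:
    """Hypothetical agent skeleton: sensors, a predictive model, and a usefulness measure."""
    sense: Callable[[], State]               # onboard measuring devices
    predict: Callable[[State, str], State]   # expected state after an action
    usefulness: Callable[[State], float]     # how desirable a predicted state is
    actions: List[str]                       # what the effectors can do

    def choose_action(self, messages: State) -> str:
        # Merge own measurements with data from the communication module;
        # received messages override the agent's own readings in this toy model.
        state = {**self.sense(), **messages}
        # Predict the outcome of each action and keep the most useful one.
        return max(self.actions,
                   key=lambda a: self.usefulness(self.predict(state, a)))


def demo_agent() -> Agent:
    # Toy world (an assumption): the agent wants its distance to a target to be 0.
    moves = {"forward": -1.0, "hold": 0.0, "back": 1.0}
    return Agent(
        sense=lambda: {"distance": 3.0},
        predict=lambda s, a: {**s, "distance": s["distance"] + moves[a]},
        usefulness=lambda s: -abs(s["distance"]),
        actions=list(moves),
    )
```

In this sketch, `demo_agent().choose_action({})` selects the move that brings the predicted distance closest to zero; a message such as `{"distance": -2.0}` changes the assessed state and therefore the choice.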

There are two directions for improving the efficiency and functionality of such systems: a) improvement of the functional and hardware components of prospective WMSE RF models; b) development of more advanced algorithms by which operators perform tasks, and their transfer through study and training.

One approach to the formalization of autonomous intelligent systems is agent-oriented modeling [4–6], in which the agent is a system that can respond adequately to changes in the external environment for which it has no built-in behavioral mechanisms. However, the vast majority of research in this area remains at the theoretical level. There is a gap between the primitive models of behavior and interaction of artificial entities, for example in combat robotics, and the expectations of practice. Improving the agent's capabilities in these areas individually is not enough. Therefore, developers' efforts should be aimed at the system integration of all onboard equipment subsystems with the operators' intellectual potential by developing information technologies based on artificial intelligence models and algorithms and on network technologies [6–8].

At the present stage, the main problems solved when controlling an unmanned object with an onboard system are: 1) processing the information received from sensors; 2) applying means of influence to change the external environment. Setting goals (a strategy for changing the situation in a favorable direction) and choosing a rational way (tactics) of achieving them (decision-making) remain the prerogative of the human operator alone in remote RTC control [9, 10].

Depending on the operator inclusion degree in the RTC control loop, there are three classes of intelligent systems [12, 13]:

- the "Situational awareness" system, which provides the control point (CP) with information sufficient for: 1) calculating situational assessments and building logical and informational models of the external and internal situation; 2) forming a knowledge base; 3) making decisions on how to act; 4) completing the goal-setting stage; 5) adjusting the knowledge base based on the results of RTC actions.

- expert systems that, in real time, present the CP operator with actions for achieving the mission goal, taking into account their understanding of the situation, worked out in sufficient depth to be implemented once the operator approves them [12].

- on-board intelligent control systems that combine the two stages listed above, able to operate without operator intervention and to communicate with them to get an assessment of the implemented session and adjust the knowledge base.

In intelligent systems, there are various inference mechanisms for choosing a rational method of action in various situations [3, 13].

Requirements for the autonomy and intelligence of cyber-physical systems

The role of automated systems in performing combat tasks should be considered from the point of view of their impact on humans. They should help the commander by simplifying his work and making it more efficient. The commander must be an element of the control system (human-in-the-loop control). Their interaction should ensure the transfer of experience both from the person to the machine and in the opposite direction, thereby ensuring adaptive behavior. This calls for the research and development of systems with so-called "cooperative intelligence". For example, the main difficulty for any autonomous system is recognizing situations in the environment. The complexity and multiplicity of the situations that arise during a mission make it impossible to identify them from the results of multiple tests and to form a knowledge base from those results. Therefore, the cyber-physical system needs an additional monitoring scheme that identifies classes of situations and successful methods of action, so that behavior models (patterns) can be formed from data obtained in real-world tests in online mode. This training process forms a meta-level, whose main link is the commander of the control center with his headquarters.

This scheme guarantees a controlled evolution of self-sufficiency in the way tasks are solved by combat units that include autonomous robotic complexes. Automated robotic systems with varying degrees of "intelligence" and autonomy thus become an organic part of future developments in the combat use of the various branches of the armed forces.

Initial assumptions and hypotheses

As a rule, the situations that an autonomous system faces are too difficult to formalize constructively by traditional formal methods, but they are well described in natural language, and there is experience of resolving them successfully. The bearer of such experience is a leader. The leader's experience is transferred by means of communication in the chosen language.

Let us assume that a person's experience and behavior should be understood as a function of the interaction between the situation and the person. A person's purposeful action depends both on the features of the situation and on personal traits (degree of motivation, structure of abilities, knowledge, etc.). A situation can be regarded as a component of the system that causes its own subjective reflection in a person. A person who chooses a certain behavior based on a subjective representation of the situation influences the situation and changes it. At the same time, the processes that occur in a person's mind when performing certain actions expand the structure of that person's abilities (knowledge, experience). In other words, a person's stable traits, which manifest themselves in actions, behavior, and experiences, can affect the situation and change it. Conversely, a situation can affect a person's stable traits when the person interprets it and changes their values, norms, ways of acting, and experiences. The agent's behavior model should also take into account this interaction between the RTC and the situation.

Note that the technology of using RTCs in accordance with the above assumes the development of at least three systems when solving the problem of their "intellectualization" [12]:

- Off-board intelligent systems for preparing the RTC for the current combat task, which should: a) ensure that all the information necessary for the successful completion of the session (goals, tasks, maps, communication algorithms, methods for obtaining corrections, etc.) is transferred to the RTC; b) train the RTC escort crew;

- Onboard intelligent control systems that ensure the performance of the combat task in autonomous mode;

- Intelligent systems for analyzing the results of the RTC session. Their output is the basis for effectively accumulating and updating the pattern database, as well as for forming requirements for the RTC functional and hardware components.

When developing onboard intelligent control systems, the concept of a "typical situation" (TS) has proved constructive. A TS is a functionally closed part of the RTC work with a clearly defined significant goal, which occurs as a whole in various (real) sessions and is specified in each of them according to the conditions in which it unfolds and the methods available for resolving the problem sub-situations that arise in the TS [3]. When the RTC is fully intellectualized, the TS and the modes of action taken in response to it form an individual pattern of behavior.

Activity is regulated by certain cognitive models of purposeful action. These models include an idea of the time sequence in which certain types of actions are performed. By analogy, by implementing its modes of action the agent purposefully acts on objects in its environment. The basis for developing methods of action must be the agent's subjective ideas about the situation of a purposeful state [14]. We will assume that the observed activity of the agent is a function of the programs it has developed, which are, in turn, the result of its inherent cognitive processes and which serve, with varying degrees of effectiveness, to fulfill the tasks set or achieve the desired results. Thus, the plan of activity and its implementation are the product of internal programming. Its result is a program (algorithm) of actions that uses the operations available to the agent and, when implemented, allows the agent to transform the observed situation into the desired (target) one. The person evaluates the feasibility of the created algorithm using the linguistic variables "conviction" and "efficiency". The problem of identifying these programs should be solved by analyzing language patterns and nonverbal communication.

RTC intellectualization should aim at including the RTC in the activity of the operator (commander) and making it consistent with that activity. The behavior and actions of the RTC must be understandable and correctable.

Definition. A pattern is the result of the activity of a person (or a group of persons) associated with an action, decision, behavior, etc., carried out in the past and treated as a template for repeated actions or as a justification for actions based on this pattern.

A person explores their own experience with the aim of aggregating it by creating pattern models. A pattern model is therefore a unit of human experience for which, in a situation similar to the typical one (a cluster), the person has formed a certain degree of confidence in obtaining the desired states. Pattern modeling is carried out in a limited subset of natural language, including case-based reasoning, which forms a specific part of human experience: meta-experience.
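The idea of a pattern as a reusable unit of experience tied to a cluster of similar situations can be sketched as a small case-based lookup. This is only an illustration under simplifying assumptions: situations are reduced to numeric feature vectors, similarity is Euclidean distance, and the names `Pattern`, `retrieve`, and `library` are hypothetical.

```python
from dataclasses import dataclass
from math import dist
from typing import List, Optional, Sequence


@dataclass
class Pattern:
    """Hypothetical unit of experience: a typical situation and what worked in it."""
    situation: Sequence[float]   # feature vector describing the typical situation
    action: str                  # action method that was used
    confidence: float            # confidence (0..1) in reaching the desired state


def retrieve(patterns: List[Pattern], current: Sequence[float],
             radius: float) -> Optional[Pattern]:
    """Return the most trusted pattern whose situation lies within `radius` of `current`."""
    similar = [p for p in patterns if dist(p.situation, current) <= radius]
    return max(similar, key=lambda p: p.confidence, default=None)


# Illustrative library of stored experience (all values are made up).
library = [
    Pattern(situation=(0.0, 0.0), action="hold course", confidence=0.6),
    Pattern(situation=(0.1, 0.0), action="reduce speed", confidence=0.9),
    Pattern(situation=(5.0, 5.0), action="evade", confidence=0.8),
]
```

A call such as `retrieve(library, (0.0, 0.1), radius=0.5)` returns the stored pattern with the highest confidence among those close to the current situation, and `None` when no stored situation is similar enough, which is the case in which new experience would have to be acquired.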

A fuzzy description model of a behavior pattern in a choice situation

Purposeful behavior is associated with the choice that occurs in a situation of purposeful state [8]. Let us consider the behavior pattern model in the form of a fuzzy description of a choice situation. The author proposed building a possible version of such a construction by "paradigm grafting" of ideas taken from other sciences [14], for example [15, 16]. The goal-oriented state consists of the following components:

- The entity carrying out the selection (the agent),

.

. - The environment of choice (S), which is understood as a set of elements and their essential properties, a change in any of which can cause or produce a change in the state of purposeful choice. Some of these elements may not be elements of the system and form an external environment for it. The impact of the external environment has the using variable description, some of which may remain unchanged for a certain time interval T, and some of which may change. The first type of variables is parameters, and the second is perturbations. The values of both types of variables are generally independent of the agent.

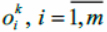

- Action available methods

– agent, that are known to it and can be used to achieve i-result (they are also called alternatives). Each method of this set has a characteristic by a set of parameters, which are called control actions.

– agent, that are known to it and can be used to achieve i-result (they are also called alternatives). Each method of this set has a characteristic by a set of parameters, which are called control actions. - Possible for environment S results are significant for the agent –

. Some set of tools called output parameters of the goal-oriented state situation help in evaluating the results.

. Some set of tools called output parameters of the goal-oriented state situation help in evaluating the results. - Method for evaluating the properties of the results obtained as a result of selecting the action method. It is obvious that the evaluation of the result should reflect the value of the result for the agent and thus reflect his personality.

- Constraints that reflect the requirements imposed by the selection situation on output variables and control actions.

- A domain model that represents a set of relations describing the dependence of the output variables on control actions, parameters, and perturbations.

- A model of the agent's limitations, described in detail in [14]. Regardless of the type of restriction description used, we will assume that the agent has a certain degree of confidence that some restriction may change so as to expand the set of possible options (alternatives) of choice.

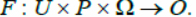

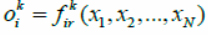

We denote by U the set of control parameters, by P the set of parameter vectors, and by Ω the set of external perturbation vectors. The model of the purposeful choice situation is described by a mapping F of the form:

(1)

The relation (1) is the domain model, which is the basis for the agent's representation of the control object functioning.
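As an illustration of what such a domain model looks like in practice, the following Python sketch treats F as a function of a control vector from U, a parameter vector from P, and a perturbation vector from Ω that returns output variables. The concrete function `toy_domain_model`, its one-step motion formula, and the output name `distance` are assumptions made for the example and do not come from the paper.

```python
from typing import Callable, Dict, Sequence

Vector = Sequence[float]
# A domain model takes a control u in U, parameters p in P and a perturbation w in Omega
# and returns the output variables of the choice situation.
DomainModel = Callable[[Vector, Vector, Vector], Dict[str, float]]


def toy_domain_model(u: Vector, p: Vector, w: Vector) -> Dict[str, float]:
    """Hypothetical F: distance covered by a vehicle in one time step."""
    speed_command = u[0]   # control action chosen by the agent
    time_step = p[0]       # parameter: fixed over the interval T
    current = w[0]         # perturbation: changes independently of the agent
    return {"distance": (speed_command + current) * time_step}
```

For example, `toy_domain_model([2.0], [10.0], [-0.5])` combines a commanded speed, a fixed time step, and an opposing current into a single output variable; only the first argument is under the agent's control.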

We will introduce measures for the described components that will be used to evaluate the goal-oriented state.

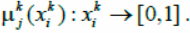

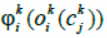

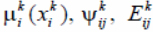

1. We assume that the agent is able to identify factors – characteristics of the environment  . The agent evaluates the influence of each factor using a linguistic variable

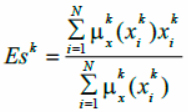

. The agent evaluates the influence of each factor using a linguistic variable  Let's enter a parameter that the agent uses to evaluate their situational awareness in a goal-oriented situation

Let's enter a parameter that the agent uses to evaluate their situational awareness in a goal-oriented situation

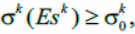

The following restriction can be defined:

where the threshold is a certain level of the agent's awareness attainable from its own information sources.

2. We will assume that, to describe the influence of the selected factors on the results, the agent uses an approximation in the form of production rules, which have the form:

where R is the number of production rules; r is the number of the current production rule; the function in the r-th rule is a crisp function that reflects the agent's view of the causal relationship between the input factors and the possible results; and the remaining variables in the rule are fuzzy variables.

This function can be represented, for example, by mathematical models, verbal descriptions, graphs, tables, algorithms, etc.

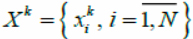

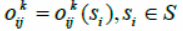

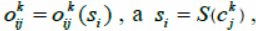

Since ckj is a function of the external environment parameters taken into account and of the system properties, a set of assumptions about their possible values forms a scenario of the possible state of the system's operating environment. Working through scenarios, for example using rules (2), makes it possible to form an idea of the possible results oki.
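Production rules that pair fuzzy conditions on the factors with a crisp function of those factors can be evaluated, for example, in the Takagi-Sugeno manner. The sketch below assumes that form; the weighted-average aggregation, the triangular membership functions, and the example quantities (visibility, detection range) are assumptions chosen for illustration and are not taken from the paper.

```python
from typing import Callable, List, Tuple


def triangle(a: float, b: float, c: float) -> Callable[[float], float]:
    """Triangular membership function with feet at a and c and peak at b."""
    def mu(x: float) -> float:
        if x <= a or x >= c:
            return 0.0
        return (x - a) / (b - a) if x <= b else (c - x) / (c - b)
    return mu


# Each rule r pairs a fuzzy condition on the factor x with a crisp function f_r(x).
Rule = Tuple[Callable[[float], float], Callable[[float], float]]


def infer(rules: List[Rule], x: float) -> float:
    """Weighted average of the crisp rule outputs, weighted by rule activation."""
    weights = [mu(x) for mu, _ in rules]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("no rule is activated for this input")
    return sum(w * f(x) for w, (_, f) in zip(weights, rules)) / total


# Hypothetical factor: visibility in km; hypothetical result: detection range in km.
rules: List[Rule] = [
    (triangle(-5.0, 0.0, 5.0), lambda x: 1.0),            # IF visibility is LOW THEN range = 1
    (triangle(0.0, 5.0, 10.0), lambda x: 1.0 + 0.5 * x),  # IF visibility is MEDIUM THEN range = 1 + 0.5x
]
```

Running `infer(rules, x)` for several assumed values of the factor is one way to work through a scenario: each assumed value yields a crisp estimate of the result that blends the rules according to how strongly each one applies.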

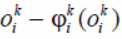

Ambiguity in choosing a method of action can be described as the degree of confidence that the method must be used to obtain the result oki. This estimate can be described by a linguistic variable

where Ik is the information that the agent has at time tk.

This measure is an individual characteristic of the agent, which may change as a result of training and experience, as well as of communication between the agents and the operator.

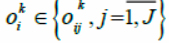

3. The choice of an action method when an agent makes a decision in a purposeful state situation in order to achieve a result is associated, as in [6, 14], with constructing a quantitative assessment of the properties of the chosen solution. The list of properties and parameters is formed on the basis of experience, knowledge, intelligence, and depth of understanding of the decision-making situation. A correct description of the properties and parameters of the action method is one of the main conditions for the choice ckj to lead to the result oki. The choice of the list of properties and of the parameters that characterize them depends entirely on the agent (its personality). This is the agent's contribution to the decision-making process. Let us represent the possible results for the agent's given choice environment as a set in which okij denotes the set of possible results when the j-th action method is selected, together with the set of results taken into account by the k-th agent.

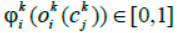

4. The value of the results. The value of the i-th type of result can be estimated by a linguistic variable. The corresponding function for the result oki is a monotone transformation, since it maps the range of the function oki(ckj) into the set of values of a linguistic variable. Since the base values of a linguistic variable correspond to fuzzy variables, this transformation converts the range of the function oki into the domain of the base fuzzy variables.

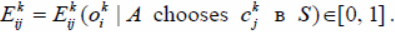

5. The effectiveness of a method of action with respect to a result is the certainty of obtaining that result by this method at the known (or assumed) cost of its implementation. It is expressed as the degree of confidence Ekij that the method of action ckj will lead to the result oki in the environment S if the agent chooses it.

Ekij is a linguistic variable and expresses the agent's individual assessment of the cost implications of the choice.

Agent selection model

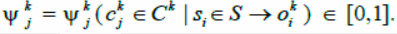

The three linguistic variables introduced above form the agent's perception model of a purposeful choice situation. Since ckj can be described in terms of Xkj and the agent has an idea of the dependency in the form of a rule base that links ckj to the value of the possible i-th result oki, the value of a purposeful state by the i-th result oki for the k-th agent can be determined according to the rule [6, 14]:

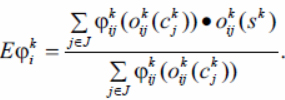

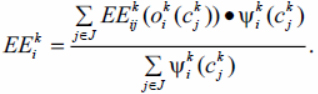

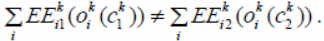

By analogy, we can estimate the value of the goal-oriented state for the k-th agent in terms of efficiency for the i-th type of result:

The agent's assessment of the desirability of the goal-oriented state, based on the i-th result and the effectiveness of achieving it in a choice situation, is a linguistic variable

The validity of this statement follows from the definition introduced by Zadeh [11] on the types of fuzzy sets, according to which a fuzzy set is a set of type n, n =1, 2, 3, ..., if the values of its membership function are fuzzy sets of type n–1.

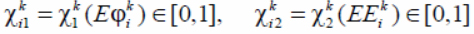

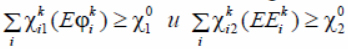

The following restrictions can be defined:

Here the agent's expectations of mission completion enter the restrictions; they reflect the balance between the costs and the results oki achieved.

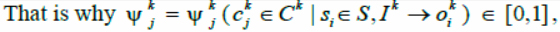

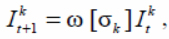

Since si is a function of the awareness of the k-th purposeful agent, if there is an iterative procedure for exchanging views between agents, then the following assumption holds:

where t is the iteration number in the interactive generation of a consistent forecast. This is an assumption that the awareness of the k-th agent grows with the iteration number. Here ω is an iterative mapping (in general, a point-to-set mapping) such that, starting from the initial level of awareness Iko, any sequence generated by this inclusion is bounded and all of its limit points lie in a single set. The validity of this assumption follows from the fact that, in the process of communication and analysis, the agent forms a certain point of view that is stable by conviction. A parameter characterizes the agent's ability to perceive new points of view and revise the structure of its awareness. Using this parameter, one or more components or parameters of the agent's views can be changed in the course of communication or interaction, which transforms the agent's model of the choice situation.
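The qualitative behavior assumed here, awareness that grows with each exchange of views while remaining bounded, can be illustrated with a deliberately simple numerical model. The update rule below is an assumption introduced for illustration only: awareness is reduced to a number in [0, 1], and the hypothetical parameter `openness` stands in for the agent's ability to accept new points of view.

```python
from typing import List


def awareness_trajectory(initial: float, openness: float, steps: int) -> List[float]:
    """Hypothetical update I(t+1) = I(t) + openness * (1 - I(t)) on the scale [0, 1].

    `openness` in (0, 1] stands in for the agent's ability to accept new points of view.
    """
    if not (0.0 <= initial <= 1.0 and 0.0 < openness <= 1.0):
        raise ValueError("initial must be in [0, 1] and openness in (0, 1]")
    levels = [initial]
    for _ in range(steps):
        # Each exchange closes a fixed share of the remaining awareness gap.
        levels.append(levels[-1] + openness * (1.0 - levels[-1]))
    return levels
```

Under this rule the sequence is non-decreasing and never exceeds 1, so it is bounded and has a single limit point; a smaller `openness` gives slower growth, which corresponds to an agent that revises its views reluctantly.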

Thus, the contribution of the purposeful agent to the choice situation consists of:

♦ Assessments of the significance of the situational factors and, through them, of its awareness of the situation in the form (3).

♦ Assessments of the value of the result.

♦ Assessments of the degree of applicability of the j-th method of action for achieving the i-th result.

♦ Assessments of the effectiveness of achieving the result oki by the j-th method of action ckj, by which the agent estimates its own costs of obtaining the result.

The first and fourth groups of assessments reflect the agent's knowledge of the subject area and its level of training of various kinds (skills, etc.).

The second and third groups describe the agent's value system and, in principle, make it possible to assess the degree of value congruence between the agent and the system, which largely determines the quality of its work.

The agent's choice situation model is a set of structural and functional properties that he believes the choice situation has and that he believes affect his satisfaction or dissatisfaction with the situation.

There is another group of factors that determines the achievement of the result: will, risk-taking, self-esteem, and motivation. These factors allow us to speak of an indicator such as confidence in obtaining the result oki in a choice situation when using one of the possible action methods.

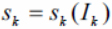

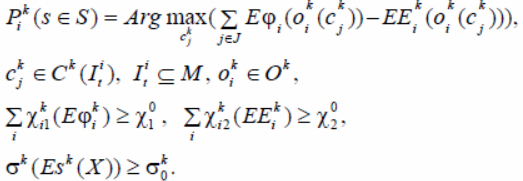

According to the hypothesis of rational behavior, the agent forms a decision according to

(4)

Since there is a relationship between the choice and the agent's perceptions of the choice situation, then in (4) it is necessary to include the knowledge base (3).

Correspondences (4) describe the behavior pattern of the agent (the cyber-physical system) in its effort to achieve the i-th result. The agent treats (4) as a pattern: a way of describing a frequently occurring problem together with the principle and algorithm of its solution, such that the solution can be reused many times without reinventing anything.

From these characteristics we can conclude that the evaluation of the influence of the factors, the degree of confidence in the need to choose a given action mode, the value of the results, and the efficiency of the action mode for each result are the four measures of personality (individuality). All other characteristics are derived from them by well-known methods of fuzzy set theory.

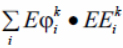

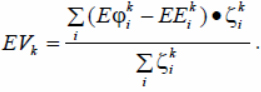

Two indicators were defined above: the value of a purposeful state by result and the value of a purposeful state by efficiency, EEki. They are elements of an integral indicator of the value of a purposeful state for the k-th individual. Taking into account the individual's level of confidence in achieving the result, we obtain an indicator of the expected unit value:

(5)

This means that if two subjects are in the same situation of choice, then the difference in their behavior should be manifested in values of unit value estimates for the result and effectiveness and in the degree of confidence in achieving the goal.
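The following Python sketch illustrates this point with crisp numbers in place of linguistic variables. The aggregation used in `expected_value` (the mean of the two value components scaled by confidence) is an assumption made for the example, not the paper's formula (5), and the agents, action names, and scores are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Assessment:
    """Crisp stand-ins (0..1) for one agent's estimates of one action method."""
    value_by_result: float       # value of the purposeful state by result
    value_by_efficiency: float   # value of the purposeful state by efficiency
    confidence: float            # confidence in obtaining the result


def expected_value(a: Assessment) -> float:
    # Assumed aggregation: mean of the two value components, scaled by confidence.
    return a.confidence * (a.value_by_result + a.value_by_efficiency) / 2.0


def rational_choice(options: Dict[str, Assessment]) -> str:
    """Pick the action method with the largest expected value."""
    return max(options, key=lambda name: expected_value(options[name]))


# Two hypothetical agents facing the same two action methods.
cautious = {
    "direct approach": Assessment(0.9, 0.8, 0.3),
    "detour": Assessment(0.7, 0.5, 0.9),
}
bold = {
    "direct approach": Assessment(0.9, 0.8, 0.8),
    "detour": Assessment(0.7, 0.5, 0.9),
}
```

The two agents value the results identically and differ only in their confidence in the direct approach, yet `rational_choice` selects different action methods for them, which is the kind of behavioral difference the text attributes to individual value and confidence estimates.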

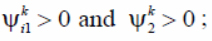

We can now formally define the goal-oriented state of the agent. The goal-oriented state of a goal-oriented agent is characterized by the following:

• The agent is in a state of choice: U(•) > 0;

• There is at least one potential result; if there are other potential results, their values for the goal-oriented state are not equal;

• There are at least two potential modes of action, ck1 and ck2;

• The efficiencies of the modes of action ck1 and ck2 are such that the sums of the value estimates of the goal-oriented state by the effectiveness of achieving the results oki in these two ways are not equal;

• There is at least one potential result oki whose value to the agent exceeds a certain threshold, and the degree of confidence in obtaining it also exceeds a certain threshold.

These rules mean that there is an agent in a state in which it wants to obtain some result. It has several alternative ways of trying to achieve this result, which differ in effectiveness, and its confidence in obtaining the desired result is sufficiently high.
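The conditions listed above can be checked mechanically once the linguistic estimates are replaced by numbers. The sketch below does this under simplifying assumptions: all estimates are crisp values, the condition U(•) > 0 is not modeled, and the function name `is_goal_oriented` and its argument layout are hypothetical.

```python
from typing import Dict


def is_goal_oriented(values: Dict[str, float],
                     efficiency: Dict[str, Dict[str, float]],
                     confidence: Dict[str, float],
                     value_threshold: float,
                     confidence_threshold: float) -> bool:
    """Check crisp versions of the listed conditions.

    values[result]              -> value of the result for the agent
    efficiency[action][result]  -> efficiency of an action method for a result
    confidence[result]          -> confidence in obtaining the result
    """
    if not values:
        return False
    # With several potential results, their values must not all coincide.
    if len(values) > 1 and len(set(values.values())) == 1:
        return False
    # At least two action methods must be available.
    if len(efficiency) < 2:
        return False
    # Summed over results, at least two methods must differ in efficiency.
    totals = {sum(per_result.values()) for per_result in efficiency.values()}
    if len(totals) < 2:
        return False
    # Some result must be both valuable enough and credible enough.
    return any(values[r] > value_threshold
               and confidence.get(r, 0.0) > confidence_threshold
               for r in values)
```

With two results of unequal value, two action methods of unequal efficiency, and one result that clears both thresholds, the function returns `True`; removing one of the action methods or lowering the confidence below its threshold makes it return `False`.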

The complexity levels of pattern models in the modeling of skills and abilities

Modeling patterns should be directed to different complexity levels of certain skills and abilities [15, 16].

Simple behavioral patterns include specific, concrete, directly observable actions that take a short time to complete, such as avoiding obstacles.

Simple cognitive patterns include specific, easily identifiable, and verifiable mental processes that take a short time to complete. This includes memorizing the names of environmental objects (external and internal), forming an ontology of concepts, creating an image of a situation, assessment, norm, and so on. Such patterns of thinking are identified by the easily observable and measurable behavioral results of the bearers of these patterns, as well as through direct feedback.

Simple linguistic patterns include recognizing and using commands that contain specific keywords, phrases, being able to form idiosyncratic needs, recognizing and responding to commands, reviewing or rejecting key commands, and so on. Using these skills is also available for direct observation and measurement.

Complex behavioral (or interactive) patterns include establishing and coordinating a sequence or combination of simple behavioral actions.

Complex cognitive patterns need a synthesis or sequencing of other simple thinking skills. Examples of using complex cognitive patterns are diagnosing a problem or current situation, creating a simulation program for the development of a situation or action program, and so on.

Complex linguistic patterns demand interactive use of language in highly dynamic situations. Examples of abilities that require complex linguistic skills are persuading someone of something, negotiating, and so on.

Approach to identifying and building a pattern model

Four basic perceptual positions can be distinguished from which information is collected and interpreted: the first position (the person's own point of view), the second position (perception of the situation from the point of view of another person), the third position (perception of the situation from the point of view of a disinterested observer), and the fourth position (perception of the situation from the point of view of the systems involved in it) [15, 16].

Modeling from the first position means trying to do something oneself and exploring one's own way of doing it. The method of action is analyzed from the researcher's own point of view.

It is important to emphasize that to obtain such a description by an agent of already performed activities, the subject in question must leave its previous position of activity and move to a new position, external both to the actions already performed and to the future, projected activities. This is called the first-level reflection: the agent's new position, characterized relative to the previous position, will be called a reflexive position, and the knowledge generated in it will be reflexive knowledge, since it is taken relative to the knowledge developed in the first position.

The second position assumes a complete imitation of the agent's behavior, in which the researcher tries to think and act as closely as possible to the way the agent thinks and acts. This approach makes it possible to understand, on an intuitive level, the essential but unconscious aspects of the thoughts and actions of the simulated agent. Modeling from the third position consists in observing the behavior of the simulated agent as a disinterested observer. It involves building a model of the method of action from the point of view of a specific scientific discipline related to the agent's subject area. The fourth position assumes a kind of intuitive synthesis of all the representations obtained, in order to arrive at a model with the maximum values of the indicators of specific value in terms of result and efficiency.

One of the goals of modeling is to identify unconscious competence and bring it into conscious awareness in order to better understand, improve, and transfer the skill.

Cognitive and behavioral competence can be modeled either "implicitly" or "explicitly". Implicit modeling assumes taking a second position in relation to the subject of modeling, in order to achieve an intuitive understanding of the subjective experiences of this person. Explicit modeling consists of moving to the third position in order to describe the explicit structure of the simulated agent's experiences so that it can be passed on to others. Implicit modeling is primarily an inductive process by which we accept and perceive the structures of the world around us. Explicit modeling is essentially a deductive process by which we describe and implement this perception. Both processes are necessary for successful modeling. Without an "implicit" stage, there can be no effective intuitive basis on which to build an "explicit" model. On the other hand, without an "explicit" phase, modeled information cannot be translated into techniques or tools and transmitted to others.

The result should be a model that synthesizes: a) an intuitive understanding of the agent's abilities, b) direct observations of the agent's work, and c) the researcher's explicit knowledge of the agent's subject area.

The main simulation phases

These phases mark a movement from implicit modeling to explicit modeling.

Preparation. This phase involves choosing a person who has the ability that is to be modeled, and determining: a) the context of modeling; b) the place and time of access to the modeled person; c) the preferred relationship with the modeled person; d) the state of the researcher in the modeling process.

In addition, this phase includes the creation of favorable conditions that will allow you to be fully immersed in the process.

Phase 1. Intuitive understanding. The first phase of the modeling process involves engaging the person being modeled in the desired activity or ability in the appropriate context. The simulation begins with taking the "second position" in order to come to an intuitive understanding of the skill that the agent demonstrates. There are no attempts to define patterns here. You need to enter the state of the model and internally identify yourself with it, mentally simulating the agent's actions. The agent's external behavior is a surface structure. The second position allows you to get information about the underlying structure. This is the phase of reflecting the representations of the simulated agent, or the phase of "unconscious understanding".

As soon as the researcher has a sufficient level of intuitive understanding of the ability being studied, it is necessary to create a context in which to use this ability and apply it, acting as the agent does. Then the researcher must try to achieve the same result while acting as himself. This yields a "double description" of the skill being modeled. The first phase of modeling ends when the researcher gets approximately the same results as the agent.

Phase 2. Abstracting. The next stage of the modeling process is to separate the essential elements of the model's behavior from random, extraneous ones. At this stage, the modeled strategies and behaviors become more explicit. Since the ability to achieve results similar to the agent's has already been acquired, it is necessary to build a method of action, based on the researcher's own views, that achieves the same results, using the "first position" for this purpose.

The problem is to identify and define the specific cognitive and behavioral steps necessary to achieve the desired result in the selected context(s). To do this, the technique of "bracketing" is used: elements of the identified strategies or behaviors are left out one at a time to assess their significance. If the reactions remain unchanged in the absence of an element, the element is not essential for the model. If omitting an element changes the result, something important has been identified. The purpose of this procedure is to reduce the simulated actions to their simplest and most elegant forms and to highlight the essential.
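The bracketing procedure can be sketched as a leave-one-out test, assuming that the result of a strategy can be evaluated as a function of the set of elements that are active. The function essential_elements, the element names, and the toy outcome function are hypothetical and serve only as an illustration.

```python
from typing import Callable, FrozenSet, List, Sequence


def essential_elements(
    elements: Sequence[str],
    outcome: Callable[[FrozenSet[str]], object],
) -> List[str]:
    """Return the elements whose omission changes the outcome of the strategy."""
    full = frozenset(elements)
    baseline = outcome(full)  # result with every element present
    essential = []
    for element in elements:
        # "Bracket" one element: evaluate the strategy without it.
        if outcome(full - {element}) != baseline:
            essential.append(element)
    return essential


def toy_outcome(active: FrozenSet[str]) -> bool:
    """Toy assumption: the target is found only with a sonar sweep on a grid pattern."""
    return "sonar_sweep" in active and "grid_pattern" in active


steps = ["sonar_sweep", "grid_pattern", "status_beep"]
print(essential_elements(steps, toy_outcome))  # ['sonar_sweep', 'grid_pattern']
```

Omitting one element at a time does not reveal elements that are redundant with each other; detecting those would require bracketing combinations of elements.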

At the end of this stage, the researcher will have obtained a "minimal model" of the agent's ability as understood in oneself (i.e., from the "first position") and will have developed an intuitive understanding of the agent's abilities from the "second position". In addition, there will be a "third position": a vantage point from which the difference between the identified way of reproducing the simulated ability and the way the person himself displays this ability will be visible.

Phase 3. Integration. The final stage of modeling involves constructing a context and procedures that allow others to master the skills that have been modeled, and therefore to get the same results as the person who served as the model. In order to draw up the required plan, it is necessary to synthesize the information received in all positions of perception. Rather than simple step-by-step imitation of the simulated person's actions, the most effective approach is to create an appropriate reference experience for students, which allows them to discover and develop the "sequence of operations" necessary for the successful implementation of the skill. To acquire the skill, students do not have to go through the entire modeling procedure. Again, the guiding principle at this stage is the "usefulness" of the modes of action for the agents that the model is designed for.

The provisions described above are the basis for developing a methodology for constructing models of behavior patterns of autonomous unmanned underwater vehicles (AUUV) performing search and rescue missions. A modeling complex for testing pattern-based algorithms for using AUUV groups has been developed. A variant of an AUUV architecture is proposed in which the onboard control system uses pattern models and a pattern-based logical inference system.

References

1. Bakhanov L.E., Davydov A.N., Kornienko V.N., Slatin V.V., Fedoseev E.P., Fedosov E.A., Fedunov B.E., Shirokov L.E. Fighter Weapon Control Systems. Basics of Multirole Aircraft Intellect. Moscow, Mashinostroenie, 2005, 400 p. (in Russ.).

2. Fedunov B.E. The problems of development of on-board promptly advising expert systems. J. Comp. and Syst. Sci. Int., 1996, no. 5, pp. 147–159 (in Russ.).

3. Fedunov B.E. On-board intelligent systems of the tactical level for anthropocentric objects (examples for manned vehicles). Moscow, 2018, 246 p. (in Russ.).

4. Fedunov B.E., Prokhorov M.D. Conclusion on the precedent in the knowledge bases of on-board intelligent systems. Artificial intelligence and decision making. 2010, no. 3, pp. 63–72 (in Russ.).

5. Gorodetsky V.I., Samoylov V.V., Trotsky D.V. Basic ontology of collective behavior of autonomous agents and its extensions. J. Comp. and Syst. Sci. Int., 2015, no. 5, pp. 102-121 (in Russ.).

6. Fedunov B.E. Constructive semantics for the development of algorithms for onboard intelligence of anthropocentric objects. J. Comp. and Syst. Sci. Int., 1998, no. 5, pp. 796-806 (in Engl.).

7. Vinogradov G.P. Modeling of decision-making by the intelligent agent. Software & Systems, 2010, no. 3, pp. 45-51 (in Russ.).

8. Vinogradov G.P., Kuznetsov V.N. Modeling agent behavior based on subjective representations of the choice situation. Artificial Intelligence and Decision Making. 2011, no. 3, pp. 58-72 (in Russ.).

9. Vinogradov G.P., Kirsanova N.V., Fomina E.E. Theoretic-game model of purposeful subjective rational choice. Neuroinformatics, 2017, vol. 10, no. 1. pp. 1-12 (in Russ.). Available at: http://www.niisi.ru/iont/ni/Journal/V10/N1/Vinogradov_et_al.pdf (accessed March 03, 2020).

10. Gribov V.F., Fedunov B.E. The on-board information intelligent system "Situational awareness of the crew" for combat aircraft. Proc. GosNIIAS, VA series, 2010, iss. 1, pp. 5-16 (in Russ.).

11. Fedunov B.E. Mechanisms of output in the knowledge base of on-board operational consulting expert systems. J. Comp. and Syst. Sci. Int., 2002, no. 4, pp. 42-52 (in Engl.).

12. Zadeh L. The concept of a linguistic variable and its application to making approximate reasoning. Information Sciences, 1975, vol. 1, pp. 119-249 (Russ. ed.: Moscow, 1976, 167 p.).

13. Fedunov B.E., Shestopalov E.V. The shell of the on-board operationally advising expert system for a typical flight situation "Entering a group into a dogfight". J. Comp. and Syst. Sci. Int. 2010, no. 3, pp. 88–105.

14. Vinogradov G.P. A subjective rational choice. J. Physics: Conf. Series, 2017, vol. 803. DOI: 10.1088/1742-6596/803/1/012176 (in Engl.).

15. Borisov P.A., Vinogradov G.P., Semenov N.A. Integration of neural network algorithms, models of nonlinear dynamics and fuzzy logic methods in forecasting problems. J. Comp. and Syst. Sci. Int., 2008, no. 1, pp. 78–84 (in Russ.).

16. Dilts R. Modeling with NLP. Meta Publ., Capitola, CA, 1998 (Russ. ed.: St. Petersburg, 2012, 206 p.).

17. Dilts R. Changing Belief Systems with NLP. Meta Publ., Capitola, CA, 1990 (Russ. ed.: Moscow, 1997, 192 p.).

UDC 004.81

DOI: 10.15827/2311-6749.20.2.2

Pattern-based control systems

G.P. Vinogradov 1, 2, Dr.Sc. (Engineering), Professor, Head of Laboratory, wgp272ng@mail.ru

1 Tver State Technical University, Tver, 170023, Russia

2 R&D Institute "Centrprogrammsystem", Tver, 170024, Russia

The requirement to intellectualize the behavior of artificial entities makes it necessary to revise the logical and mathematical abstractions that underlie the construction of their onboard control systems. Developing such systems on the basis of pattern theory is a pressing problem.

The paper shows that this approach ensures the transfer of effective experience and makes the teleological approach compatible with the approach based on cause-and-effect relationships.

The paper considers the problems of identifying patterns and building their models. It proposes using four information-processing positions for this purpose. The development of a method of logical inference on patterns is described.

Keywords: decision making, purposeful systems, fuzzy judgment, choice situation, AUUV.

